A container is a special sandboxed environment that encapsulates an application along with all of its dependencies. Once an app is packaged into a container, it interacts only with the system kernel and is otherwise completely isolated from all other processes and applications. In other words, container technology allows programs to be abstracted from the environment in which they are run.

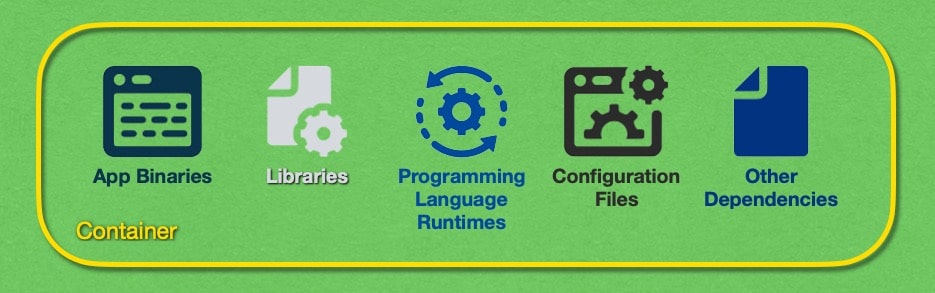

This abstraction dictates that each containerized application must be as self-sufficient as possible. To this end, containers include not only the program’s binaries, but also all other libraries, programming language runtimes, configuration files, and other dependencies that the application needs to run properly.

By bundling all dependencies along with the app itself inside a single container, DevOps teams are essentially creating entire application runtime environments that are standalone, portable, and isolated from the rest of the system through virtualization. Such containerized applications can be rapidly deployed on external systems with minimal risk of unexpected errors.

Containers achieve this rapid deployment by keeping their size as small as possible. In fact, larger applications are often broken down into microservices that are packaged in separate containers. A microservice is a standalone portion of an application that takes some input, processes it, and sends back some output. Examples of microservices include a database controller, user authentication, a session manager, and many others.
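To make the "input, process, output" pattern concrete, here is a minimal sketch of a single-purpose microservice written in Python with only the standard library. The word-counting task, the `count_words`, `WordCountHandler` and `serve` names, the JSON request shape, and port 8080 are all hypothetical choices for illustration. In a real deployment, a script like this would be the one process packaged into its container image.

```python
import json
from http.server import BaseHTTPRequestHandler, HTTPServer


def count_words(payload: dict) -> dict:
    """The service's whole job: take some input, process it, return some output."""
    text = payload.get("text", "")
    return {"words": len(text.split())}


class WordCountHandler(BaseHTTPRequestHandler):
    """Thin HTTP wrapper that exposes count_words() to other services."""

    def do_POST(self):
        length = int(self.headers.get("Content-Length", 0))
        payload = json.loads(self.rfile.read(length) or b"{}")
        body = json.dumps(count_words(payload)).encode("utf-8")
        self.send_response(200)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)


def serve(port: int = 8080) -> None:
    """Blocking entry point; a container image would run this as its start command."""
    HTTPServer(("0.0.0.0", port), WordCountHandler).serve_forever()


if __name__ == "__main__":
    # Quick local check of the processing step without starting the server.
    print(count_words({"text": "containers are small"}))  # {'words': 3}
```

Calling `serve()` inside the container would accept POST requests such as `{"text": "..."}` and answer with a small JSON document, which is all another microservice needs to know about it.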

By breaking down large projects into microservices and then containerizing them, you can spread the containers across multiple physical computers, so that the failure of a single machine does not bring down the whole application. It is even possible to selectively power up containers only when there is a pending task for the respective microservice. Once the microservice has carried out all pending tasks, the container can be powered down again. Such just-in-time container management can be very efficient in terms of hardware utilization.
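The just-in-time idea boils down to a simple sizing rule. The sketch below assumes, purely for illustration, that each container of a microservice can work through a fixed number of queued tasks and that there is an upper limit on how many containers may run at once; `desired_containers` and its parameters are hypothetical names, not part of any orchestrator's API.

```python
import math


def desired_containers(pending_tasks: int, tasks_per_container: int, max_containers: int) -> int:
    """Just-in-time sizing for one microservice.

    Returns 0 when nothing is queued (every container can be powered down);
    otherwise enough containers to work through the queue, capped at max_containers.
    """
    if pending_tasks <= 0:
        return 0
    needed = math.ceil(pending_tasks / tasks_per_container)
    return min(needed, max_containers)


for queue_length in (0, 3, 25, 500):
    print(queue_length, "->", desired_containers(queue_length, tasks_per_container=10, max_containers=20))
# 0 -> 0
# 3 -> 1
# 25 -> 3
# 500 -> 20
```

A scheduler would call a function like this periodically and start or stop containers until the running count matches the result.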

Resource efficiency is one of the core principles behind container technology. That is why each container virtualizes and packages only the binaries, libraries, and frameworks needed to run the application. This stands in stark contrast to virtual machines that store a full-fledged operating system in addition to any applications installed on top of it.

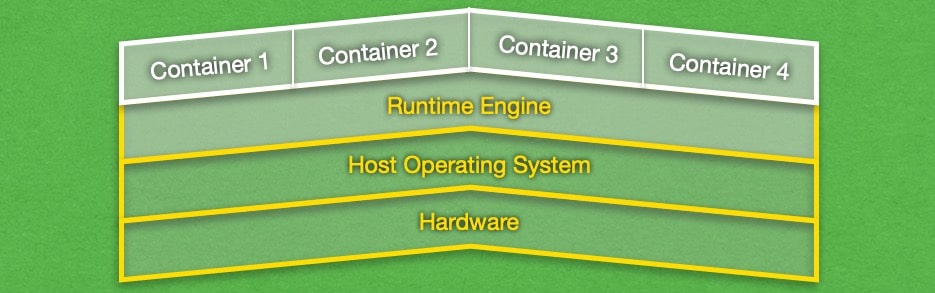

So if a container lacks an operating system, how can it execute the packaged application? The answer is that each container runs on top of a host operating system, much like virtual machines that are powered by a Type-2 hypervisor. However, unlike virtual machines, containers use the host operating system’s kernel for program execution. Doing so brings increased performance and less overhead when compared to VMs. You can read our comparison between virtual machines and containers to learn more about how the two technologies differ from one another.

The performance gains come from the fact that the host OS kernel runs directly on the server hardware. In contrast, each virtual machine runs its own guest kernel, which must pass many of its tasks through the hypervisor (and, with a Type-2 hypervisor, through the underlying host operating system as well) before they reach the hardware. By skipping this extra step, containers are more responsive and can perform more tasks in the same amount of time.

Similarly, the use of the host OS kernel means that containerized applications do not need to virtualize and package their own kernel as VMs do. Less code means a smaller container size, which, in turn, translates to faster boot-up times.

Container performance can be improved even further by using special host operating systems that have been designed to be small, lightweight, and stripped of any unnecessary components. Examples of such operating systems include RancherOS, VMware Photon OS, Microsoft Windows Nano Server, CoreOS, SUSE MicroOS, and others. In addition to better performance, these tiny operating systems also boot very quickly and offer fewer attack vectors for hackers to exploit.

Containerized applications are executed through a runtime engine that runs on top of the host operating system. The runtime engine is also used for the overall container management, such as starting and stopping containers, adjusting container-specific settings, and load balancing the containers that are in use. The most popular runtime engine by far is Docker. Other runtime engines include rkt, Hyper runV, and LXC/LXD.

Container technology can be used for virtually every type of Internet hosting – from web hosting, to application hosting, to data processing, and many others. That said, containerization brings the most benefits to projects that are too demanding for a single server to handle as well as for websites and services that can have vastly different computing requirements from one moment to the next. That is why some of the largest companies in the tech world like Google and Netflix use container technology to power their services.

Since each container is designed to perform a small part of the overall task, popular applications and websites often employ thousands or even millions of containers at the same time. As you might imagine, manually managing that many containers through the command line is not feasible due to their sheer number. That is where cloud orchestration comes in.

Cloud orchestration software like Kubernetes manages the container runtime environment so you don’t have to. Through automation, you can set up the orchestration software to regularly check the health of all containers and automatically restart any faulty ones. Moreover, orchestration software works great if your performance needs vary over time, as the software can add new containers when they are needed and scale back when usage drops.

In addition to scaling containers up and down, orchestration software makes it a breeze to update the software of the existing containers. These updates are often driven from a version control system like Git, for example through an automated build-and-deploy pipeline. Pushing out updates and rolling back changes is simple and often takes just one command.

To learn more about container technology, continue reading or jump to the section that interests you:

- Advantages

- Disadvantages

- What Types of Containers Are There?

- What Apps Can I Run in Containers?

- Where Can I Run My Containers?

- What Is Cloud Orchestration?

- Containers vs Virtual Machines

- What Is Container-as-a-Service?

- Conclusion

Advantages

Container technology has a lot going for it. It sits on the bleeding edge in the online world and has numerous advantages over traditional hosting methods. Below, we will list some of the reasons why using containerized applications might be a good idea:

- First and foremost, cloud containers are very resource-efficient because they use the host operating system’s kernel. As a result, they consume a much smaller amount of memory when compared to virtual machines.

- Another big advantage of container technology is that containers package the entire software stack needed to run a specific application. As a result, the chance of running into odd errors caused by OS discrepancies between servers is minimal.

- Thanks to their small size, containers can boot up in about a second. This allows for auto-scaling, where new containers are started automatically during traffic surges. This scaling technique helps keep the website or application highly available even during peak utilization.

- Very large projects can also take advantage of cloud orchestration software that automatically scales the number of active cloud containers.

- Containerized applications are often deployed on specialized operating systems that are designed to power containers. These operating systems are very small in size since they do not contain any software or frameworks that would not be used by containers. Consequently, such operating systems boot very quickly and are fairly secure when compared to their desktop counterparts.

- The entire containerization model has been developed with microservices in mind. Microservices allow large applications to be broken down into smaller independent units that take some input, process it, and return some sort of output. It is common to encapsulate one microservice per container.

- Containerized applications can be easily migrated from one physical computer to another. That’s because each container already holds all of its settings and dependencies. So the migration just consists of copying the container files onto the new machine. There is little to no additional setup required.

- Speaking of hardware, containers can be easily deployed on a wide range of machines such as personal computers, servers in a data center, and the public or private cloud.

- Due to their modular nature, container technology supports rolling updates for system patches. In other words, your website or application will remain fully operational while all of the containers are updated in small batches. In theory, this should allow you to perform system maintenance while maintaining 100% uptime.

- There is also full support for version control such as Git. This allows new updates to be pushed via a single command and rollbacks can be easily performed as well.

- Most container-as-a-service companies have adopted a pay-as-you-go pricing model where you pay only for the server resources that you have actually used.
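The auto-scaling mentioned in the list above is often driven by a proportional rule: if the containers are running hotter than a target utilization, add containers in proportion to the overshoot, and remove them when load falls. The sketch below uses the ratio that Kubernetes documents for its Horizontal Pod Autoscaler, `ceil(currentReplicas * currentMetric / targetMetric)`, clamped to a minimum and maximum. The function name and the CPU figures are illustrative assumptions.

```python
import math


def desired_replicas(current_replicas: int, current_metric: float, target_metric: float,
                     min_replicas: int = 1, max_replicas: int = 10) -> int:
    """Proportional scaling rule: grow or shrink the replica count by the ratio
    of observed load to target load, then clamp to the allowed range."""
    raw = math.ceil(current_replicas * current_metric / target_metric)
    return max(min_replicas, min(raw, max_replicas))


# Traffic surge: 4 containers averaging 90% CPU against a 60% target.
print(desired_replicas(4, current_metric=90, target_metric=60))  # 6
# Traffic drops: 6 containers averaging 20% CPU.
print(desired_replicas(6, current_metric=20, target_metric=60))  # 2
```

Production autoscalers add further safeguards, such as a tolerance band that ignores small deviations and stabilization windows that prevent rapid flapping, which this sketch leaves out.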

Disadvantages

Cloud containers can provide you with a lot of flexibility and great performance; however, there are some drawbacks to using containerized applications as well. Below, we will list the most notable issues with this technology:

- Container technology is arguably a better long-term solution than virtual private servers; however, it is also more complex. Creating an elastic cloud platform that can scale along with the application’s resource needs adds a lot of complexity and requires advanced skills and knowledge to set up.

- Another drawback to consider is that container hosting plans are generally more expensive than the equivalent VPS package.

- Containers are not suitable for monolithic applications. A monolithic application is a program in which every part communicates with and depends on all the other parts. Such applications cannot be broken down into microservices, which is why cloud containers are not a good fit for them.

- Some people believe that container services are less secure when compared to VPS hosting. That’s because if the host OS gets hacked, the attacker will have access to all containers running on top of it. While technically the VPS hypervisor can also be hacked, it offers a much smaller attack surface for hackers and hence it is deemed more secure.

What Types of Containers Are There?

Although container technology hasn’t been around for that long, there are already many different types of containers and container runtime engines on the market. Usually, a runtime engine will either conform to the Open Container Initiative (OCI) standard or use a proprietary container format. You can think of the various runtime engines as computer programs and of the container formats as the different file formats that those programs can open and interact with.

Currently, the most popular container technology is the Docker container, which runs on the Docker Engine. Docker, Inc. was also one of the founding members of the Open Container Initiative, and Docker containers are OCI-compliant. Docker’s main competitors are CoreOS rkt, LXC, LXD, OpenVZ, Hyper runV, Kata Containers, and Linux-VServer.

While containers have only recently seen widespread adoption, similar technologies that silo and isolate applications from the surrounding environment have been in existence for more than a decade. If you have had prior experience with Wine bottles in Linux, FreeBSD jails, Solaris Containers, or AIX Workload Partitions, then you already have a good idea of what a container is and how it works.

In fact, here at AwardSpace, we have been using container-like technology on our shared hosting platforms for nearly twenty years. So when you buy a premium shared hosting plan, purchase a semi-dedicated server, or even just get a free hosting package, your account will be placed in a container-like construct that is completely isolated from the rest of the system and any other clients that may be using the same server. This isolation provides an increased level of security and privacy for your hosting account.

What Is Docker?

Docker is an open-source containerization utility that is available for Linux, Windows, and macOS. It is currently one of the most popular container technologies on the market and comes with both free and paid licenses.

Docker containers use a minimal amount of system resources and can be deployed rapidly to scale with an application’s computational needs. Moreover, each container has its own isolated runtime environment that, by default, cannot be reached from outside the host unless you explicitly publish its ports. Docker also scales to very large deployments, which is why it is widely used in large enterprises and entire data centers.

Docker containers are actually part of a larger suite of Docker software. The suite also includes the Docker Engine, Docker Swarm, and Docker Hub, the registry where container images are stored and shared. When combined, these four tools form a comprehensive solution that can manage even the most demanding projects.

Docker Swarm is a container orchestration tool that allows you to scale the number of Docker containers in real time in accordance with the computational needs of your project. The Docker Engine, on the other hand, runs on top of each host operating system and enables fine-grained control over the individual Docker containers.

Another great thing about Docker containers is that they integrate nicely with many popular developer and DevOps tools like Git, Subversion, CVS, Jenkins, Puppet, Vagrant, Helm, Deis, and Ansible. In fact, it is common to use a version control system like Git to push out updates to your Docker containers.

What Apps Can I Run in Containers?

Container technology is most often used by large corporations to deploy cloud-based applications that are capable of handling massive amounts of traffic. These applications can be consumer-oriented or they may be internal company systems. Organizations that commonly use cloud containers for their web-based and mobile applications include big tech and media companies, government institutions, large investment groups, and banks.

Many large corporations have internal programs that have been in existence for decades. When these programs are rewritten and modernized, they often take the form of containerized applications that can be deployed in the cloud instead of an in-house data center. By rewriting the legacy programs to be cloud-native, corporations can leverage the elasticity, scalability, and stability that come with cloud hosting.

Overall, nearly all applications can be adapted to use container technology and run in the cloud. The only real limitation is that the programs must be able to be broken down into microservices so that each microservice can be packaged into a container. As such, monolithic applications cannot be containerized that easily and would need to be completely rewritten.

Where Can I Run My Containers?

One of the great advantages of containers is that they can be run essentially everywhere. Since container technology decouples your code from the hardware that executes it, your containerized application can run on any machine with a compatible operating system and CPU architecture. For example, you can use your personal laptop to run your containers while you are still developing them and, once they are complete, move them to a private server in a colocation facility. The containers will “just work” on the new machine since they were never hardware-dependent to begin with.

In fact, since container technology completely decouples the software from the underlying hardware, you can get a virtual private server and run your containers inside of it. And, of course, containerized applications run great in the cloud no matter whether it’s public, private, or hybrid.

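Containers are also supported on all major operating systems like Linux, Windows, and macOS. However, it should be noted that each container is OS-dependent, because it shares the kernel of the operating system it runs on. In other words, you cannot take a Windows-based container and run it natively on a Linux server. (On macOS, for example, Docker runs Linux containers inside a lightweight Linux virtual machine.)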
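Because a container borrows the host's kernel rather than bringing its own, the image has to target the same operating system and CPU architecture as the machine it runs on. OCI image configurations record this target in their `os` and `architecture` fields. The check below is a simplified sketch of that comparison; the helper names and the small architecture alias table are assumptions made for illustration, and real runtimes also handle architecture variants, emulation, and multi-platform images.

```python
import platform

# OCI image configs use Go-style platform names ("linux"/"windows", "amd64"/"arm64").
# Hypothetical alias table mapping Python's platform.machine() values onto them.
_ARCH_ALIASES = {"x86_64": "amd64", "amd64": "amd64", "aarch64": "arm64", "arm64": "arm64"}


def host_platform() -> tuple:
    """Return the host's (os, architecture) in OCI-style naming."""
    machine = platform.machine().lower()
    return platform.system().lower(), _ARCH_ALIASES.get(machine, machine)


def runs_natively(image_config: dict, host_os: str, host_arch: str) -> bool:
    """A container shares the host kernel, so OS and CPU architecture must both match."""
    return image_config.get("os") == host_os and image_config.get("architecture") == host_arch


windows_image = {"os": "windows", "architecture": "amd64"}
linux_image = {"os": "linux", "architecture": "amd64"}
print(runs_natively(windows_image, "linux", "amd64"))  # False
print(runs_natively(linux_image, "linux", "amd64"))    # True
print(host_platform())  # depends on the machine running the script
```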

Speaking of operating systems, containerized applications are most often run on stripped-down operating systems that are purpose-built to power containers. That is because a regular OS is too bloated and full of unnecessary features when you just want to run containers on top of it. By using these smaller OS variants, companies are able to optimize performance, enhance security, and improve system stability.

Popular operating systems that are designed to power containers include Microsoft Windows Nano Server, VMware Photon OS, RancherOS, CoreOS, and Ubuntu Core. Some of these operating systems are open-source and can be freely used, while others are proprietary and are offered as part of a larger service for running containers.

What Is Cloud Orchestration?

Large companies often run massive web and mobile applications that require hundreds or even thousands of containers to power them. When you are dealing with that many containers, it is no longer feasible or practical to manually manage them. That is where cloud orchestration comes in.

There is a special kind of software called a container orchestrator that is built specifically to manage your cloud containers so you don’t have to. A container orchestrator can build and deploy new containers when your app utilization increases as well as retire containers when they are no longer needed. Each container is provided with a virtual IP address and its health is checked regularly. If a container hangs or freezes, the cloud orchestrator will detect that and reboot the container.
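The behavior described above is usually implemented as a reconciliation loop: the orchestrator repeatedly compares the desired state (how many healthy containers should exist) with what is actually running, and then acts to close the gap. Here is a toy, single-pass version in Python. The `Container` record, the `reconcile` function, and the action strings are hypothetical; a real orchestrator would run this continuously and carry out the actions instead of returning them.

```python
from dataclasses import dataclass


@dataclass
class Container:
    name: str
    healthy: bool  # result of the most recent health check


def reconcile(desired: int, running: list[Container]) -> list[str]:
    """One pass of a toy reconciliation loop: return the actions needed to
    move from the running containers to `desired` healthy containers."""
    # When scaling down, retire unhealthy containers first (False sorts before True).
    ordered = sorted(running, key=lambda c: c.healthy)
    surplus = max(0, len(running) - desired)
    to_stop, to_keep = ordered[:surplus], ordered[surplus:]

    actions = [f"stop {c.name}" for c in to_stop]
    # Any container we keep but that failed its health check gets restarted.
    actions += [f"restart {c.name}" for c in to_keep if not c.healthy]
    # If demand grew, start the missing containers.
    actions += [f"start replica-{i}" for i in range(max(0, desired - len(running)))]
    return actions


print(reconcile(3, [Container("web-1", True), Container("web-2", False)]))
# ['restart web-2', 'start replica-0']
print(reconcile(1, [Container("a", True), Container("b", False), Container("c", True)]))
# ['stop b', 'stop a']
```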

Orchestration software also comes with a sophisticated update mechanism where containers are patched and upgraded a few at a time. These rolling updates allow most of the containers to remain online, so the application can stay available, ideally with 100% uptime, even during updates.
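A rolling update can be sketched as replacing the fleet in fixed-size batches, so that only a bounded number of containers are out of service at any moment. The parameter name `max_unavailable` is borrowed from the Kubernetes Deployment setting `maxUnavailable`, but the function itself is a simplified, hypothetical illustration rather than how any orchestrator is actually implemented.

```python
def rolling_update(fleet: list[str], new_version: str, max_unavailable: int) -> list[list[str]]:
    """Replace containers in batches of at most `max_unavailable`, recording the
    fleet after each batch. Containers outside the current batch keep serving."""
    if max_unavailable < 1:
        raise ValueError("max_unavailable must be at least 1")
    fleet = list(fleet)  # work on a copy
    snapshots = []
    for start in range(0, len(fleet), max_unavailable):
        for i in range(start, min(start + max_unavailable, len(fleet))):
            fleet[i] = new_version  # stop the old container, start the patched one
        snapshots.append(list(fleet))
    return snapshots


for step in rolling_update(["v1"] * 5, "v2", max_unavailable=2):
    print(step)
# ['v2', 'v2', 'v1', 'v1', 'v1']
# ['v2', 'v2', 'v2', 'v2', 'v1']
# ['v2', 'v2', 'v2', 'v2', 'v2']
```

At every step, at least three of the five containers are running either the old or the new version, which is what lets the application keep serving traffic during the update.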

Just about the only downside of using cloud orchestration tools is the fact that they add quite a bit of complexity to the application deployment. As such, container orchestrators are still predominantly used in large-scale applications where the effort it takes to set up and manage the orchestration tool is less than the time needed to manually manage each container.

Orchestration software can be open-source and provided free of charge or you may need to pay a licensing fee to use it. Some of the more popular cloud orchestration packages include CoreOS Tectonic, Docker Swarm, Marathon, Apache Mesos, and Kubernetes. We will take a closer look at Kubernetes in the next section.

What Is Kubernetes?

Kubernetes, also known as K8s, is the most popular cloud orchestration platform on the market today. Originally developed by Google and later open-sourced, this container orchestrator is able to deploy, scale, and manage your containerized applications according to their current utilization. This results in improved efficiency, higher availability, and less time that your DevOps team needs to spend on maintaining all deployed containers.

A very popular combination is to use Docker containers and the Kubernetes orchestrator in tandem. In addition to running Docker container images, Kubernetes also features service health monitoring, deployment on both virtual and physical hardware, automatic rollouts and rollbacks, and autonomous scaling of services to accommodate the current traffic.
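Kubernetes' health monitoring is configured through probes, and a liveness probe only triggers a restart after a number of consecutive failures (its `failureThreshold` setting, which defaults to 3). The sketch below mimics that counting logic in plain Python; `should_restart` is a hypothetical helper, not a Kubernetes API, and it ignores timing details such as the interval between probes.

```python
def should_restart(probe_results: list[bool], failure_threshold: int = 3) -> bool:
    """Return True once `failure_threshold` health checks fail in a row.

    A success resets the counter, so an occasional slow or failed response
    does not cause a restart on its own.
    """
    consecutive_failures = 0
    for passed in probe_results:
        consecutive_failures = 0 if passed else consecutive_failures + 1
        if consecutive_failures >= failure_threshold:
            return True
    return False


print(should_restart([True, False, False, True, False]))  # False: never 3 failures in a row
print(should_restart([True, False, False, False]))        # True
```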

Containers vs Virtual Machines

Containers are often compared to virtual private servers since they both use virtualization technology. But while a container only packages the application and the dependencies it needs to run, a virtual machine includes a full-fledged operating system as well. This difference in packaging has noticeable performance and security implications, which we will explore below.

Overall, containers are considered to be more efficient and better performing than virtual private servers. This is because there is less overhead with containers that run directly on top of a host operating system and use its kernel. As a result, containers are very small in size and can be started in under a second.

VMs, on the other hand, each pack their own operating system and rely on a hypervisor to communicate with the server hardware. This makes virtual machines more bloated and slower since they not only have to load more processes but also need to go through the hypervisor, an intermediary step, in order to execute computing tasks. The end result is a virtual system that may take a minute or even longer to start and fully initialize all OS components and applications.

Container technology also has the upper hand when it comes to elasticity. Containerized applications are much better at scaling to accommodate fluctuations in traffic and computational needs. This is because each container is built as a microservice and you can easily add more containers in order to expand the processing capability for that particular microservice. Virtual private servers, on the other hand, have a set amount of computing resources available to them and it may take some time for additional resources to be added to them.

Security is another important aspect to consider. When a hacker tries to exploit a virtual private server, they will try to find a vulnerability in the hypervisor that powers the VPS. Conversely, if the hacker’s target is a collection of containers, then they would try to hack into the host operating system that is powering the containers. While container-optimized operating systems are very small in comparison to their desktop counterparts, they still have a lot more features and processes, and hence a lot more attack surface, when compared to a VM’s hypervisor. As such, virtual machines are considered to be more secure than container technology.

While containerization and virtual private servers are often seen as competing technologies, they are, in fact, complementary. That’s because you can run containers inside of a VPS. Doing so adds a hypervisor boundary around the containerized application, which increases its isolation and improves security.

You can read our full article on how a VPS compares to a container to learn even more about the differences between these two technologies.

What Is Container-as-a-Service?

Container-as-a-Service, or CaaS, refers to a new web hosting option that is built around container technology. Rather than renting entire virtual or physical servers, you are provided access to containers that you can use to run your website or application.

CaaS sits in the middle between Infrastructure-as-a-Service (IaaS) and Platform-as-a-Service (PaaS). However, unlike IaaS, which is typically built on hypervisor-based virtual machines, CaaS is built exclusively around containers. Due to this heavy reliance on containers, Container-as-a-Service solutions feature a sophisticated cloud orchestrator like Kubernetes that allows the efficient management of containers at scale.

This increased network complexity and the relative novelty of CaaS make it a more expensive option than most VPS plans. That said, Container-as-a-Service providers utilize a pay-as-you-go pricing model, thus ensuring that you pay only for the resources that you have used. This pricing model can enable even small and mid-sized businesses to use the same network infrastructure that big tech companies also utilize.
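A short worked example shows why pay-as-you-go billing can matter for workloads with traffic spikes. All figures below, including the per-container-hour rate and the daily usage pattern, are hypothetical and do not reflect any provider's actual pricing.

```python
def pay_as_you_go_cost(containers_per_hour: list[int], rate_per_container_hour: float) -> float:
    """Bill only for the container-hours actually used."""
    return sum(containers_per_hour) * rate_per_container_hour


# Hypothetical day: 2 containers for 20 quiet hours, 8 during a 4-hour peak.
usage = [2] * 20 + [8] * 4
rate = 0.05  # hypothetical price per container-hour

used = pay_as_you_go_cost(usage, rate)
always_peak = pay_as_you_go_cost([max(usage)] * len(usage), rate)  # paying for peak capacity all day
print(f"pay-as-you-go: ${used:.2f}, sized for peak: ${always_peak:.2f}")
# pay-as-you-go: $3.60, sized for peak: $9.60
```

With these made-up numbers, paying only for the 72 container-hours actually used costs $3.60 for the day, versus $9.60 for 192 container-hours if peak capacity had to be paid for around the clock.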

Conclusion

While still a newcomer, container technology shows a lot of promise as a highly efficient web and application hosting solution. When you use containers, you can get more out of your hardware than if you were to use virtual private servers. That said, containers are more difficult to manage and introduce a lot of network complexity. For this reason, the use of container orchestration tools is highly recommended, as these tools are able to automate a large portion of container management.

Overall, containers have proven that they are a viable hosting solution at any scale. Any business, regardless of its size, is able to benefit from container technology. Container-as-a-Service products are usually charged on a pay-as-you-go basis, enabling everyone to pay only for the resources that they have used.

Containers are often pitted against virtual private servers, and the two technologies are frequently compared because both are based on virtualization. But while virtual machines employ virtualization at the hardware level, containers do so at the OS level. Moreover, the two technologies are not incompatible. Running containers inside lightweight virtual private servers has gained popularity in recent years and shows how you can gain the benefits of both hosting technologies while mitigating their drawbacks to a large extent.